Key Takeaways

The Probabilistic Paradigm: In AI search, a "rank" is a statistical probability of recommendation rather than a fixed position.

Synthetic Probing is Essential: Measuring visibility requires thousands of simulated user prompts to filter out the noise of LLM stochasticity.

RAG Interception: Tracking platforms monitor the Retrieval-Augmented Generation pipeline to identify which specific content snippets are winning citations.

Semantic Proximity Metrics: AI ranking is determined by the semantic proximity between brand data and user intent, typically measured as the Cosine Similarity of their vector embeddings.

Entity Synchronization: Factual consistency across the Knowledge Graph is the primary trust signal used by LLMs to determine ranking authority.

The Death of the Linear Rank: Navigating Stochasticity

The most fundamental challenge in tracking rankings within AI-generated answers is stochasticity. Traditional Google search results remain relatively stable for a given keyword and location; LLMs, by contrast, generate text token by token, sampling each token from a probability distribution. As a result, a brand might be the primary recommendation in one session and be omitted entirely in the next, even when the user intent is identical.

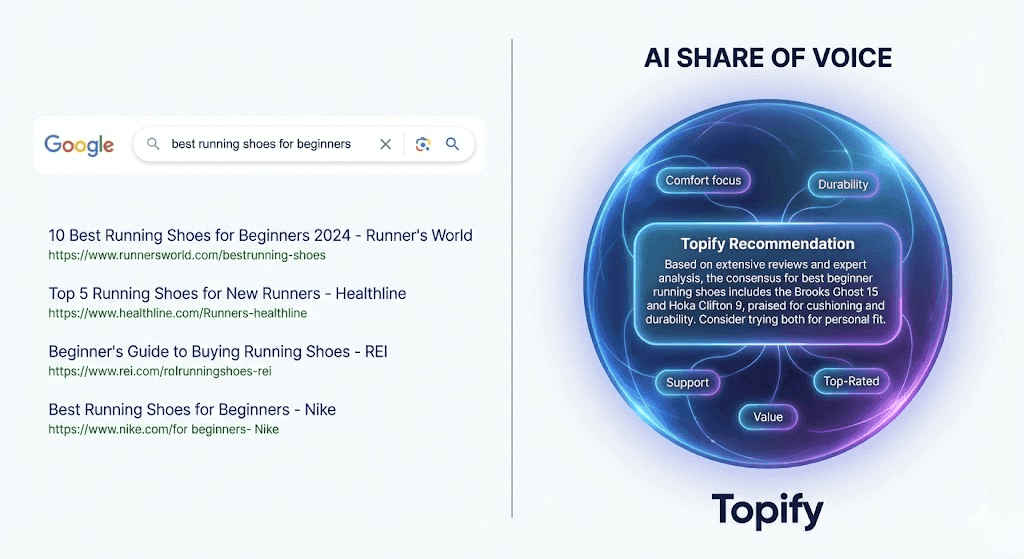

1.1 From Static Positions to Probability Scores

In the era of "Blue Links," tracking was positional: for a given keyword, your page held a specific slot (Position 1, Position 2, and so on) or did not appear at all. In the AI era, tracking is probabilistic. An "AI Rank" is effectively a Recommendation Probability Score. If a tracking tool like Topify finds that your brand is mentioned in 85% of simulated interactions, that 85% is your functional "ranking." This shift is a core component of the transition from SEO to GEO (Generative Engine Optimization), where brands must optimize for the likelihood of being included in the model's synthesized output.
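To make the idea concrete, here is a minimal Python sketch of a recommendation probability score, assuming you already have a set of answers sampled from repeated sessions of the same prompt. The brand names, the whole-word matching rule, and the helper names are hypothetical illustrations, not Topify's method; real tools also have to handle aliases, misspellings, and product names.

```python
import re
from typing import Iterable


def mentions_brand(response: str, brand: str) -> bool:
    """True if `brand` appears as a whole word (case-insensitive) in `response`.

    Assumption: a plain whole-word match; aliases and misspellings are ignored.
    """
    pattern = r"\b" + re.escape(brand) + r"\b"
    return re.search(pattern, response, flags=re.IGNORECASE) is not None


def recommendation_probability(responses: Iterable[str], brand: str) -> float:
    """Share of sampled responses that mention `brand` (0.0 when nothing was sampled)."""
    sampled = list(responses)
    if not sampled:
        return 0.0
    hits = sum(mentions_brand(r, brand) for r in sampled)
    return hits / len(sampled)


# Hypothetical answers to one prompt, each from a separate session.
sessions = [
    "For mid-size teams, Acme is a strong pick.",
    "Consider Globex or Initech.",
    "acme and Globex both fit this use case.",
    "Acme offers the simplest setup.",
]
print(recommendation_probability(sessions, "Acme"))  # 0.75
```

Because each answer is a fresh sample, a score like this only becomes meaningful as the number of sessions grows, which is why the large-scale probing described below matters.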

1.2 The "Black Box" Attribution Problem

Proprietary model providers do not disclose the internal weighting factors behind their answers, and there is no "Search Console" for LLMs that reveals why one brand was cited over another. To work around this, AI rank tracking tools treat the model as a behavioral system: they observe its outputs across very large numbers of prompts and infer the "Trust Weights" the model appears to assign to various entities.

Methodology 1: Large-Scale Synthetic Probing

The primary engine behind accurate AI rank tracking is Synthetic Probing. This involves saturating the AI model with a vast matrix of prompts and statistically analyzing the patterns of its responses.

2.1 The Multi-Persona Prompt Matrix

To get a true measurement of visibility, Topify generates thousands of variations of a single user intent. These are not just keywords but complex, conversational prompts that simulate different user personas—such as a technical engineer, a procurement officer, or a casual consumer.
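A prompt matrix of this kind can be sketched as a Cartesian product of persona templates and question phrasings. The intent, persona templates, phrasings, and function name below are hypothetical examples for illustration; a production matrix would be far larger and, as described next, would also vary models and locations.

```python
from itertools import product

# Hypothetical intent, persona templates, and phrasings for one probe set.
INTENT = "choosing an enterprise firewall"
PERSONAS = {
    "engineer": "I'm a network engineer evaluating options for {intent}.",
    "procurement": "As a procurement officer, I need a vendor shortlist for {intent}.",
    "consumer": "I'm not very technical and need help with {intent}.",
}
PHRASINGS = [
    "Which vendors would you recommend?",
    "What are the top three options, and why?",
]


def build_prompt_matrix(
    intent: str, personas: dict[str, str], phrasings: list[str]
) -> list[tuple[str, str]]:
    """Return one (persona, prompt) pair for every persona x phrasing combination."""
    return [
        (persona, f"{template.format(intent=intent)} {phrasing}")
        for (persona, template), phrasing in product(personas.items(), phrasings)
    ]


matrix = build_prompt_matrix(INTENT, PERSONAS, PHRASINGS)
print(len(matrix))  # 6
print(matrix[0][1])
```

Each prompt in the matrix would then be sent many times per model version and location before the responses are aggregated.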

The Process: By running these probes across different geographic nodes and different versions of the model (e.g., GPT-4o vs. GPT-5), the software calculates a stabilized AI Share of Voice (SOV).

The Goal: To eliminate the noise of individual session variance and reveal the brand's true "Exposure Rate" across the model's entire knowledge base.
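One standard way to show why more probes yield a more stable SOV is a binomial confidence interval around the mention rate. The sketch below uses the Wilson score interval, a well-known statistical method chosen here purely as an illustration; the source does not say which statistic Topify uses, and the probe counts are hypothetical.

```python
import math


def share_of_voice(hits: int, probes: int, z: float = 1.96) -> tuple[float, float, float]:
    """Return (point estimate, lower bound, upper bound) for a mention rate.

    Uses the Wilson score interval; z = 1.96 corresponds to roughly 95% confidence.
    """
    if probes <= 0:
        raise ValueError("probes must be positive")
    if not 0 <= hits <= probes:
        raise ValueError("hits must be between 0 and probes")
    p = hits / probes
    denom = 1 + z**2 / probes
    centre = (p + z**2 / (2 * probes)) / denom
    margin = (z / denom) * math.sqrt(p * (1 - p) / probes + z**2 / (4 * probes**2))
    return p, centre - margin, centre + margin


for hits, probes in [(17, 20), (850, 1000)]:
    sov, low, high = share_of_voice(hits, probes)
    print(f"{hits}/{probes}: SOV={sov:.2f}, 95% CI=({low:.2f}, {high:.2f})")
```

Both runs share the same 85% point estimate, but 17 hits in 20 probes gives an interval of roughly 0.64 to 0.95, while 850 hits in 1,000 probes narrows it to roughly 0.83 to 0.87. That narrowing is what "stabilized" means in practice.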

2.2 Measuring Positional Weight within Synthesis

Even in a paragraph of text, position matters. Tracking software analyzes where in the response your brand is mentioned. A mention in the first sentence with a direct recommendation carries a higher "Rank Weight" than a mention in a list of secondary alternatives at the end of the response. Topify assigns a numerical value to this "Narrative Position" to help teams understand the quality of their exposure.
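As a rough illustration, a narrative-position score could weight a mention by the sentence in which the brand first appears. The reciprocal weighting (1 for the first sentence, 1/2 for the second, and so on), the naive sentence splitter, and the function name are assumptions made for this sketch, not Topify's actual scoring.

```python
import re


def narrative_position_weight(response: str, brand: str) -> float:
    """Hypothetical score: 1/k, where k is the 1-based index of the first
    sentence that mentions `brand`; 0.0 if the brand never appears."""
    # Naive splitter: break after ., ! or ? when followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", response.strip()) if s]
    needle = re.compile(r"\b" + re.escape(brand) + r"\b", re.IGNORECASE)
    for index, sentence in enumerate(sentences):
        if needle.search(sentence):
            return 1.0 / (index + 1)
    return 0.0


lead = "Acme is the best fit here. Globex is a cheaper alternative."
tail = "Globex leads this category. Initech is solid. Acme is another option."
print(narrative_position_weight(lead, "Acme"))  # 1.0
print(narrative_position_weight(tail, "Acme"))
```

A reciprocal decay rewards first-sentence recommendations heavily; a gentler linear decay would be an equally reasonable design choice.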

Methodology 2: RAG-Layer Interception and Snippet Analysis

Most modern AI search engines use Retrieval-Augmented Generation (RAG). This architecture is the "smoking gun" for rank tracking because, when the engine exposes its citations, it reveals which sources the AI "retrieved" before it "generated" its answer.

3.1 Sniffing the Citation Trail

When Perplexity or SearchGPT provides a citation, it is showing its work. AI rank tracking tools monitor these citations to see which specific URLs are being used as "Grounding Data."

Retrievability Audits: If the AI consistently cites your competitor's "Comparison Table" but ignores your "Features" page, the tool identifies a structural failure in your content.

The Optimization: This allows brands to pursue Answer Engine Optimization (AEO) strategies that focus on making content more "machine-digestible" for the RAG retriever.
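A basic citation-trail report can be built from the URLs an engine cites, assuming those URLs have already been captured for each answer. The sketch counts citations per host and the share of answers that cite a given domain; the `.example` domains and helper names are hypothetical placeholders.

```python
from collections import Counter
from urllib.parse import urlparse


def host(url: str) -> str:
    """Lower-cased hostname with any leading 'www.' removed."""
    name = (urlparse(url).hostname or "").lower()
    return name[4:] if name.startswith("www.") else name


def citation_report(answers: list[list[str]], domain: str) -> tuple[Counter, float]:
    """Count citations per host, plus the share of answers citing `domain` at least once."""
    per_host = Counter(host(url) for urls in answers for url in urls)
    citing = sum(any(host(url) == domain for url in urls) for urls in answers)
    share = citing / len(answers) if answers else 0.0
    return per_host, share


# Hypothetical citation lists captured from three answers to the same prompt.
captured = [
    ["https://www.competitor.example/comparison-table", "https://reviews.example/firewalls"],
    ["https://competitor.example/comparison-table"],
    ["https://www.yourbrand.example/features", "https://competitor.example/pricing"],
]
per_host, share = citation_report(captured, "yourbrand.example")
print(per_host.most_common(1))  # [('competitor.example', 3)]
print(round(share, 2))          # 0.33
```

Grouping by full URL instead of host would show which specific pages (for example, a comparison table versus a features page) are winning the citations.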

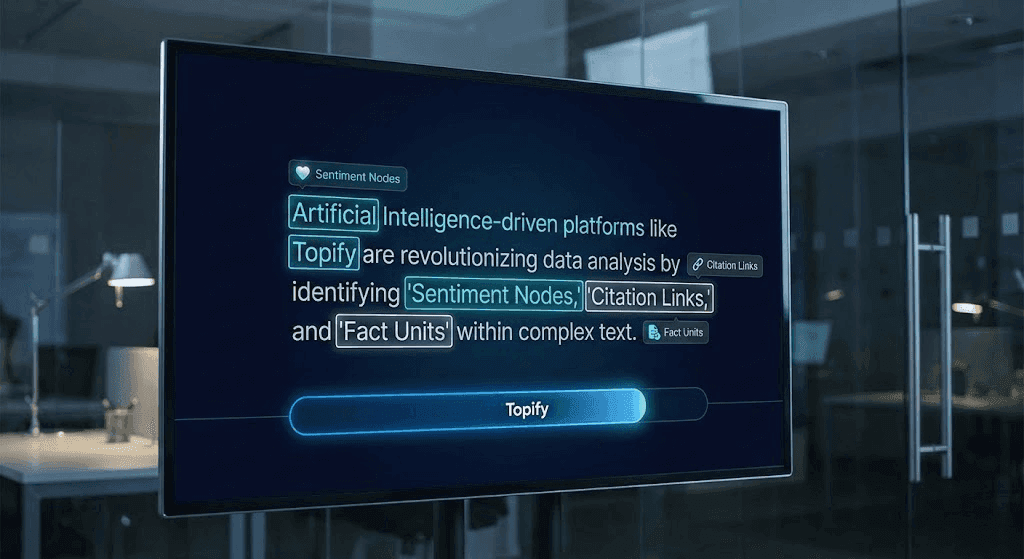

3.2 Information Density and Vector Alignment

RAG engines don't just look for keywords; they look for Information Density. Tracking tools measure the ratio of verifiable facts to marketing adjectives in your content. By comparing your "Fact Units" to those of the cited competitors, Topify can tell you exactly what technical details are missing from your pages to win the citation back.
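A crude proxy for this ratio can be computed with two counters: numeric values as stand-ins for verifiable facts, and matches against a small list of marketing adjectives. Both the word list and the "every number is a fact unit" rule are hypothetical simplifications for illustration; real fact extraction is considerably harder.

```python
import re

# Hypothetical marketing lexicon; a real audit would need a much larger curated list.
MARKETING_WORDS = {
    "best", "leading", "innovative", "seamless", "powerful",
    "world-class", "revolutionary", "cutting-edge", "robust",
}


def density_profile(text: str) -> tuple[int, int, float]:
    """Return (fact_units, marketing_words, ratio) for a block of copy.

    Assumption: a "fact unit" is crudely approximated as any number in the text.
    The ratio adds 1 to the denominator so copy with no marketing words stays finite.
    """
    words = re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())
    fluff = sum(word in MARKETING_WORDS for word in words)
    facts = len(re.findall(r"\d+(?:\.\d+)?", text))
    return facts, fluff, facts / (fluff + 1)


narrative = "Our innovative, powerful platform delivers seamless, world-class protection."
atomic = "Throughput: 40 Gbps. Latency: 0.8 ms. Price: 1200 USD per year. 99.99% uptime SLA."
print(density_profile(narrative))  # (0, 4, 0.0)
print(density_profile(atomic))     # (4, 0, 4.0)
```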

Methodology 3: Semantic Vector Modeling (Proxy Embeddings)

At the deepest level, AI rank tracking involves calculating the Semantic Proximity between a brand's data and a user's prompt in a multi-dimensional vector space.

4.1 Cosine Similarity Scoring

Embedding models convert text into numerical vectors (embeddings). If the vector for your brand's content is mathematically close to the vector for a high-value prompt, your ranking probability tends to increase.

How it's Measured: Tracking tools use proxy embedding models to calculate the Cosine Similarity between your brand's documentation and the prompts being used by your target audience.

The Roadmap: If the "Semantic Distance" is too large, Topify provides a roadmap for "Fact-Injection," suggesting specific entities and technical attributes to add to your content so that it moves closer to the prompts your audience actually uses. This is essential for mastering entity SEO for AI visibility.
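The measurement itself is simple once embeddings exist. The sketch below computes cosine similarity in plain Python and, as an assumption, treats "Semantic Distance" as cosine distance (1 minus cosine similarity). The 3-dimensional vectors are hypothetical toy values; real vectors would come from whichever proxy embedding model a tool uses and typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def semantic_distance(a: list[float], b: list[float]) -> float:
    """Assumed definition: cosine distance, i.e. 1 - cosine similarity."""
    return 1.0 - cosine_similarity(a, b)


# Toy 3-dimensional vectors standing in for real embeddings (hypothetical values).
prompt = [0.9, 0.1, 0.3]
features_page = [0.8, 0.2, 0.4]
about_page = [0.1, 0.9, 0.2]
for name, vec in [("features_page", features_page), ("about_page", about_page)]:
    print(name, round(semantic_distance(prompt, vec), 3))
```

In this toy setup the features page sits much closer to the prompt than the about page, which is the kind of gap a "Fact-Injection" roadmap would aim to close.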

4.2 Entity Synchronicity Audit

AI systems can cross-check the facts they cite against knowledge graphs and other widely referenced sources. If your brand data is inconsistent across Wikipedia, LinkedIn, and your official site, the AI may perceive a "Trust Risk." Tracking tools audit these "Truth Nodes" to ensure your brand signals are synchronized, which is one of the most reliable ways to stabilize rankings in models that prioritize factual integrity.
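An entity synchronicity audit can be sketched as a majority vote per attribute across sources, flagging the sources that disagree. The records, attribute names, and the lower-case normalization below are hypothetical; a real audit would need fuzzier matching (for example, "Austin, TX" versus "Austin, Texas").

```python
from collections import Counter


def audit_entity(records: dict[str, dict[str, str]]) -> dict[str, dict]:
    """Compare one entity's attributes across sources.

    For each attribute, returns the most common (normalized) value and the sorted
    list of sources whose value differs from it. On ties, Counter.most_common
    keeps the value encountered first, i.e. the one from the earliest-listed source.
    """
    attributes = sorted({attr for fields in records.values() for attr in fields})
    report = {}
    for attr in attributes:
        values = {
            source: fields[attr].strip().lower()  # naive normalization
            for source, fields in records.items()
            if attr in fields
        }
        majority, _ = Counter(values.values()).most_common(1)[0]
        conflicts = sorted(s for s, v in values.items() if v != majority)
        report[attr] = {"majority": majority, "conflicts": conflicts}
    return report


# Hypothetical brand facts collected from three "Truth Nodes".
records = {
    "official_site": {"founded": "2015", "headquarters": "Austin, TX", "ceo": "Jane Doe"},
    "wikipedia": {"founded": "2015", "headquarters": "Austin, TX", "ceo": "John Roe"},
    "linkedin": {"founded": "2014", "headquarters": "Austin, TX", "ceo": "Jane Doe"},
}
for attr, result in audit_entity(records).items():
    if result["conflicts"]:
        print(f"{attr}: {result['conflicts']} disagree with '{result['majority']}'")
```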

Comparison Matrix: Traditional Rank Tracking vs. AI Visibility Tracking

The shift from SEO to GEO is a shift from monitoring "Lists" to monitoring "Logic."

| Feature | Traditional SEO Tracking | AI Visibility Tracking (Topify) |
| --- | --- | --- |
| Primary Unit | URL Position (1-100) | AI Share of Voice (SOV %) |
| Logic Type | Syntactic Matching (Keywords) | Semantic Synthesis (Intent) |
| Data Nature | Deterministic (Static) | Probabilistic (Statistical) |
| Retrieval Check | Googlebot Crawler | RAG Interception & Probing |
| Success Metric | Click-Through Rate (CTR) | Citation Rate & Recommendation |
| Trust Signal | Backlinks & Domain Authority | Information Density & Entity Sync |

To learn how to apply these metrics to your own site, explore our latest guide on how to rank in AI Overviews.

Illustrative Case Study: Closing the "Invisibility Gap"

To illustrate the ROI of these measurement methodologies, consider the case of a simulated enterprise intervention.

6.1 The Situation

In a representative case study (pseudonym: CloudSecure), an enterprise firewall provider held the #1 spot on Google for "enterprise firewall solutions." However, their internal audit using Topify showed that ChatGPT was only mentioning them in 8% of relevant technical prompts, while a smaller, newer competitor was appearing in 45% of responses.

6.2 The Discovery

By using Synthetic Probing and RAG Interception, the team discovered that the AI retriever was skipping CloudSecure's main product pages because they were too narrative-heavy. The competitor, meanwhile, had published a series of "Atomic Fact Sheets" that the AI found much easier to summarize.

6.3 The Result

By refactoring their high-performing pages into fact-dense modules based on Topify’s roadmap, the brand increased its AI Share of Voice to 38% in three months. They didn't just improve their "rank"; they won the recommendation at the moment of discovery. This is the power of the best AI search engine optimization tools.

Strategic Outlook: Measuring the "Agentic Handshake"

By 2026, the primary audience for your website will no longer be humans, but AI Agents. These agents will autonomously search, compare, and recommend products to human users.

7.1 M2M (Machine-to-Machine) Discovery

Rank tracking is evolving into "Acceptability Tracking." We are moving toward measuring how well an AI agent can verify your brand's technical specifications and pricing in milliseconds. Topify is already developing "Agentic Visibility Scores" to help brands ensure they are the "logical choice" for these autonomous finders.

7.2 Social Sentiment as a Grounding Layer

LLMs increasingly use high-authority social signals (Reddit, X, LinkedIn) as a "Grounding Layer" for their answers. Measurement must now include monitoring these community signals to ensure that the AI's "Internal Bias" about your brand matches the reality of your community reputation.

Frequently Asked Questions (FAQ)

8.1 Why can't I just use Google Search Console to track my AI rankings?

Google Search Console tracks traditional SERP impressions and clicks. It does not track your performance inside the conversational outputs of ChatGPT, Perplexity, or Claude. Because these platforms use different retrieval and synthesis engines, you need a specialized visibility platform like Topify to see your "True SOV."

8.2 What is an "Invisibility Gap" in AI search?

An Invisibility Gap occurs when you rank highly on Google for a term but are completely absent from the AI's generated answer for the same topic. This usually indicates that while your "Authority" (backlinks) is high, your "Machine Readability" (fact density and entity sync) is low, causing the AI retriever to skip your site.

8.3 How often should I probe my AI visibility?

Because LLMs are updated and fine-tuned constantly, and because RAG-based search engines crawl the web daily, we recommend a continuous monitoring cycle. A single model update or a new competitor technical blog can displace your citation overnight. Topify provides daily SOV reports to ensure your brand reputation remains stable.
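A continuous monitoring cycle usually pairs the daily SOV report with a simple alert. The sketch below flags a day whose SOV falls more than a fixed amount below the trailing average; the 7-day window, the 0.10 absolute threshold, and the readings are hypothetical choices for illustration, not Topify settings.

```python
from statistics import mean


def sov_dropped(daily_sov: list[float], window: int = 7, threshold: float = 0.10) -> bool:
    """Return True if today's SOV sits more than `threshold` below the trailing mean.

    `daily_sov` is ordered oldest to newest; the last value is today's reading.
    The baseline is the mean of the `window` days immediately before today.
    """
    if len(daily_sov) < window + 1:
        return False  # not enough history to form a baseline
    baseline = mean(daily_sov[-window - 1:-1])
    return baseline - daily_sov[-1] > threshold


# Hypothetical daily SOV readings; the last day follows a model update.
history = [0.42, 0.40, 0.41, 0.43, 0.42, 0.41, 0.40, 0.27]
print(sov_dropped(history))  # True
```

An absolute threshold is easy to reason about; a threshold scaled to the confidence interval of each day's probe count would be less prone to false alarms on small samples.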

8.4 Is "Share of Voice" a better metric than "Ranking"?

In generative search, yes. "Ranking" implies a single position that doesn't exist in a conversational answer. AI Share of Voice (SOV) is a statistical measure of how often you are recommended across thousands of variations, providing a much more accurate picture of your brand's market influence in the AI era.

Conclusion: Turning the Black Box into a Predictive Roadmap

The era of "guessing" your brand's performance in AI search is over. By utilizing synthetic probing, RAG interception, and semantic vector modeling, platforms like Topify turn the "Black Box" of LLMs into a transparent, actionable roadmap for growth. In a world where the answer is synthesized in real-time, your survival depends on the accuracy of your measurements.

By shifting your focus from static ranks to probabilistic share of voice, you ensure that your brand is not just a result on a page, but the definitive source cited by the world's most powerful AI models.