In the current landscape of digital marketing, the most influential gatekeepers of information—OpenAI, Google, and Anthropic—maintain "Black Boxes." Their Large Language Models (LLMs) are proprietary, their internal weights are secret, and their real-time ranking logic is obscured by billion-dollar corporate firewalls. This lack of transparency poses a massive challenge for enterprises: How do you optimize for a system that refuses to show you its rules?

The answer lies in the shift from Access to Observation. Just as astronomers infer the mass of distant, unseen objects from their gravitational effects, AI visibility tracking software like Topify maps the "internal logic" of LLMs by observing their outputs across millions of simulated scenarios. This guide provides a technical teardown of the methodologies used to track brand visibility in the generative search era, explaining how we move from "Black Box" uncertainty to data-driven strategic clarity.

Key Takeaways

Behavioral Probing: Tracking is achieved by saturating the model with millions of "Synthetic Probes" to statistically determine its recommendation patterns.

RAG-Layer Sniffing: By monitoring the citation trail in search-enabled models like Perplexity, software can reverse-engineer which brand assets the AI perceives as high-authority sources.

Vector Proximity Modeling: Platforms use proxy embedding models to calculate the "Semantic Distance" between your content and high-intent user prompts.

Probabilistic Metrics: Success is quantified by AI Share of Voice (SOV), a probabilistic measure of brand dominance across diverse prompt sets.

Strategic Roadmap: By identifying "Invisibility Gaps," platforms like Topify provide the actionable intelligence required to fix misrepresentations and secure citations in synthesized answers.

The Challenge: Navigating the Non-Deterministic "Black Box"

To understand how tracking works, we must first accept that LLMs are non-deterministic. Unlike a Google Search result, which remains relatively stable for a specific keyword and location, an AI answer is a probabilistic "synthesis."

1.1 The Stochasticity Problem

If you ask ChatGPT the same question twice, the phrasing might change. If you ask it 1,000 times, you will see a distribution of answers. Traditional SEO tools, designed to track static URL lists, are fundamentally incapable of measuring this variation. This shift is why moving from SEO to GEO is a technical requirement for modern brands.
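To make the idea of a "distribution of answers" concrete, here is a minimal sketch in Python. The `sample_answer` function is a hypothetical stand-in for a real model call, and the brand names and weights are invented purely for illustration; a real tracker would replace it with actual API requests.

```python
import random
from collections import Counter


def sample_answer(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for one model call.

    A real implementation would send `prompt` to an LLM and extract the
    recommended brand. Here we draw from made-up weights instead.
    """
    brands = ["BrandA", "BrandB", "BrandC"]
    weights = [0.6, 0.3, 0.1]  # assumed probabilities, illustration only
    return rng.choices(brands, weights=weights, k=1)[0]


def answer_distribution(prompt: str, runs: int, seed: int = 0) -> dict[str, float]:
    """Ask the same prompt `runs` times and return the share of each answer."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    rng = random.Random(seed)
    counts = Counter(sample_answer(prompt, rng) for _ in range(runs))
    return {brand: n / runs for brand, n in counts.items()}


if __name__ == "__main__":
    dist = answer_distribution("Who are the leaders in cloud security?", runs=1000)
    for brand, share in sorted(dist.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{brand}: {share:.1%}")
```

The point of the sketch is that a single response tells you very little; only the shares across many runs describe how the model tends to behave.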

1.2 The Privacy Barrier

LLM providers do not offer a "Search Console" for brands. There is no API that reports "how often you were mentioned last week." Tracking software must therefore rely on Empirical Observation—treating the AI as a stimulus-response system.

Methodology 1: Large-Scale Synthetic Probing

The primary mechanism for tracking visibility without internal access is Synthetic Probing. This is the process of sending millions of unique, high-intent prompts to the model and statistically analyzing the output.

2.1 The Prompt Matrix

Topify maintains a vast library of "Prompts" that represent the entire customer journey.

Syntactic Probe: "Who are the leaders in cloud security?"

Semantic Probe: "I need a scalable security solution for an AWS-based fintech firm with strict SOC2 requirements."
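A prompt library like this can be generated rather than written by hand. The sketch below expands two templates modeled on the probes above into a small matrix; the template wording, the `build_prompt_matrix` name, and the example categories and contexts are assumptions for illustration, not Topify's actual library.

```python
from itertools import product

# Templates modeled on the syntactic and semantic probes above (illustrative).
TEMPLATES = [
    "Who are the leaders in {category}?",
    "I need a scalable {category} solution for {context}.",
]


def build_prompt_matrix(categories: list[str], contexts: list[str]) -> list[str]:
    """Expand every template across all category/context combinations.

    Templates that ignore `context` produce duplicates, so a set is used
    to keep each distinct prompt only once.
    """
    prompts = set()
    for template, category, context in product(TEMPLATES, categories, contexts):
        prompts.add(template.format(category=category, context=context))
    return sorted(prompts)


if __name__ == "__main__":
    matrix = build_prompt_matrix(
        categories=["cloud security", "endpoint security"],
        contexts=[
            "an AWS-based fintech firm with strict SOC2 requirements",
            "a small retail chain",
        ],
    )
    for prompt in matrix:
        print(prompt)
```

Adding one more category or context multiplies the number of probes, which is how a library grows to cover a whole customer journey.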

2.2 Persona and Geographic Simulation

Because AI models can provide different answers based on perceived user context, tracking software simulates diverse personas and locations. By aggregating these results, the software builds a high-resolution map of your brand's AI Share of Voice (SOV), the fraction of probes in which your brand is mentioned. If your brand appears in 80% of probes for a specific category, that is strong behavioral evidence that the model has high "Implicit Trust" in your brand entity.
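As a rough illustration of how a Share of Voice figure might be computed from probe responses, the sketch below counts mentions and attaches a Wilson score interval so the percentage comes with an error bar. The function names and sample responses are hypothetical, and the case-insensitive substring match is a deliberate simplification; production systems would need proper entity matching.

```python
import math


def count_mentions(responses: list[str], brand: str) -> int:
    """Count responses that mention `brand` (naive case-insensitive match)."""
    needle = brand.lower()
    return sum(needle in response.lower() for response in responses)


def share_of_voice(responses: list[str], brand: str) -> float:
    """Fraction of probe responses that mention the brand."""
    if not responses:
        raise ValueError("need at least one response")
    return count_mentions(responses, brand) / len(responses)


def wilson_interval(hits: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (z=1.96 is roughly 95%)."""
    if n <= 0 or not 0 <= hits <= n:
        raise ValueError("require n > 0 and 0 <= hits <= n")
    p = hits / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half


if __name__ == "__main__":
    sample = [
        "Acme and Globex lead this space.",
        "Try Globex.",
        "Acme is a solid choice.",
        "I am not sure.",
        "acme again",
    ]
    print(f"SOV: {share_of_voice(sample, 'Acme'):.0%}")
    low, high = wilson_interval(hits=80, n=100)
    print(f"80/100 mentions: between {low:.1%} and {high:.1%}")
```

The interval matters because an 80% rate from 100 probes is much less certain than the same rate from 10,000.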

Methodology 2: RAG-Layer Sniffing and Attribution

Most modern AI search engines, such as Perplexity and ChatGPT search (previewed as SearchGPT), utilize Retrieval-Augmented Generation (RAG). This is a multi-step pipeline where the AI retrieves snippets from the live web before generating an answer.

3.1 Intercepting the Citation Trail

When an AI provides an answer with a citation, it is showing its "work." Tracking software monitors these citations at scale to see which specific URLs are being retrieved.

The Insight: By analyzing which pages are being fetched, Topify can tell you which of your content modules are effectively serving as "Grounding Data" for the LLM.
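Citation monitoring can be sketched as a simple aggregation over the URLs that accompany each answer. The example below assumes the citations have already been collected as lists of URLs (how they are captured depends on the platform); the function names and the example.com URLs are placeholders.

```python
from collections import Counter
from urllib.parse import urlparse


def cited_domains(urls: list[str]) -> set[str]:
    """Return the distinct domains cited in one answer, without 'www.'."""
    domains = set()
    for url in urls:
        host = urlparse(url).netloc.lower()
        if host:  # skip malformed entries that have no host part
            domains.add(host.removeprefix("www."))
    return domains


def citation_counts(answers: list[list[str]]) -> Counter:
    """Count, per domain, how many answers cited it at least once."""
    counts: Counter = Counter()
    for urls in answers:
        counts.update(cited_domains(urls))
    return counts


def citation_rate(answers: list[list[str]], domain: str) -> float:
    """Share of answers that cite `domain` at least once."""
    if not answers:
        raise ValueError("need at least one answer")
    return citation_counts(answers)[domain] / len(answers)


if __name__ == "__main__":
    observed = [
        ["https://www.example.com/latency", "https://docs.example.com/specs"],
        ["https://example.com/pricing", "https://competitor.example.org/a"],
        [],  # an answer that cited nothing
    ]
    print(citation_counts(observed).most_common())
    print(f"{citation_rate(observed, 'example.com'):.0%}")
```

Counting each domain once per answer keeps a single citation-heavy response from inflating the rate.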

3.2 Information Density Audits

AI retrievers prioritize content with high Information Density. Tracking software analyzes the "winning" citations of your competitors to see why the RAG engine chose them over you. Often, it comes down to a higher ratio of verifiable facts to marketing adjectives. This is a core pillar of Answer Engine Optimization (AEO).
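One crude way to approximate the "facts versus adjectives" ratio is to count numeric measurements against a list of promotional words. The word list, the unit pattern, and the scoring formula below are all assumptions chosen for illustration; they are a heuristic sketch, not how any retriever actually scores content.

```python
import re

# Hypothetical list of promotional words; extend for your own domain.
MARKETING_WORDS = {
    "leading", "innovative", "best-in-class", "world-class",
    "cutting-edge", "seamless", "revolutionary",
}

# A number followed by a unit, e.g. "40 ms", "99.9%", "1.2 Gbps".
MEASUREMENT = re.compile(
    r"\b\d+(?:\.\d+)?\s?(?:ms|%|gbps|mbps|gb|tb|km|usd)", re.IGNORECASE
)
WORD = re.compile(r"[a-z][a-z\-]*")


def fact_density(text: str) -> tuple[int, int, float]:
    """Return (measurements, marketing_words, ratio).

    ratio = measurements / (measurements + marketing_words),
    or 0.0 when neither appears in the text.
    """
    facts = len(MEASUREMENT.findall(text))
    fluff = sum(word in MARKETING_WORDS for word in WORD.findall(text.lower()))
    total = facts + fluff
    return facts, fluff, (facts / total if total else 0.0)


if __name__ == "__main__":
    narrative = "We are the leading, innovative provider of seamless connectivity."
    factual = "Median latency is 40 ms with 99.9% uptime and 1.2 Gbps throughput."
    print(fact_density(narrative))
    print(fact_density(factual))
```

Comparing this ratio for your page and for a competitor's cited page gives a quick, if rough, signal of which one reads as more verifiable.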

Methodology 3: Semantic Vector Modeling (Proxy Embeddings)

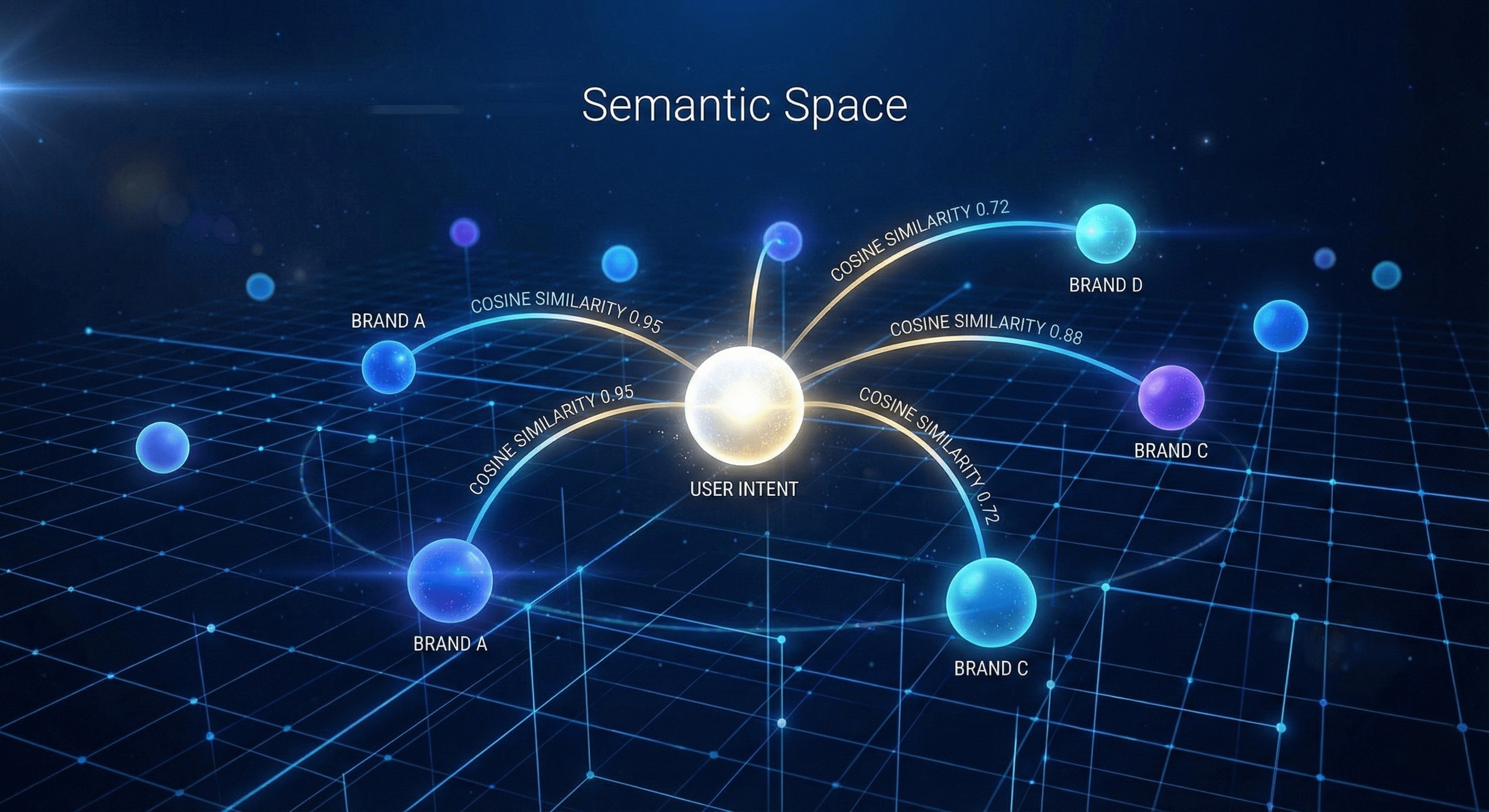

This is the most technically sophisticated layer of AI visibility tracking. LLMs process information as Vector Embeddings—numerical coordinates in a multi-dimensional "Semantic Space."

4.1 Calculating "Semantic Distance"

While we don't have the LLM's internal vector database, we can use Proxy Embedding Models (like OpenAI’s public text-embedding-3-small and text-embedding-3-large, or open-source equivalents) to approximate how the model relates content to prompts.

The Measurement: We convert your brand's content into a vector and compare it to the vector of a user's prompt.

The Action: We calculate the Cosine Similarity. If your content is mathematically "too far" from the user's intent, the AI will likely ignore it during retrieval. Topify identifies this distance and tells you exactly what technical entities or facts to add to close the gap.
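The measurement and action steps above reduce to a cosine similarity between two vectors. In the sketch below, the three-dimensional vectors are toy values standing in for the output of a proxy embedding model (real embeddings have hundreds or thousands of dimensions), and the page names are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def rank_by_proximity(
    prompt_vec: list[float], pages: dict[str, list[float]]
) -> list[tuple[str, float]]:
    """Sort pages from semantically closest to farthest from the prompt."""
    scored = [(name, cosine_similarity(prompt_vec, vec)) for name, vec in pages.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Toy vectors; in practice these come from an embedding model.
    prompt = [0.9, 0.1, 0.0]
    pages = {
        "narrative_page": [0.1, 0.9, 0.0],
        "fact_first_page": [0.8, 0.1, 0.1],
    }
    for name, score in rank_by_proximity(prompt, pages):
        print(f"{name}: similarity {score:.3f}, distance {1 - score:.3f}")
```

"Semantic Distance" here is simply one minus the similarity, so a page whose score is far below a competitor's is the one to revise first.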

4.2 Knowledge Graph Synchronization

AI models cross-reference brand data across the global Knowledge Graph (Wikipedia, LinkedIn, official registries). Visibility software audits these "Nodes" to find signal conflicts. If your founding date or key features are inconsistent across the web, the AI perceives a "Trust Risk" and stops recommending you. This is essential for mastering entity SEO for AI visibility.
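A signal-conflict audit can be sketched as a comparison of the same fields across sources. The source names, field names, and values below are invented for illustration, and the normalization (trimming and lowercasing) is a simplifying assumption; real records would need fuzzier matching for dates and names.

```python
from collections import defaultdict


def find_signal_conflicts(
    records: dict[str, dict[str, str]]
) -> dict[str, dict[str, list[str]]]:
    """Return fields whose normalized value differs between sources.

    `records` maps a source name to its field/value pairs. The result maps
    each conflicting field to {normalized value: [sources reporting it]}.
    """
    values_by_field: dict[str, dict[str, list[str]]] = defaultdict(
        lambda: defaultdict(list)
    )
    for source, fields in records.items():
        for field, value in fields.items():
            values_by_field[field][value.strip().lower()].append(source)
    return {
        field: {value: sorted(sources) for value, sources in values.items()}
        for field, values in values_by_field.items()
        if len(values) > 1  # more than one distinct value means a conflict
    }


if __name__ == "__main__":
    brand_records = {
        "wikipedia": {"founding_year": "2009", "hq": "Oslo"},
        "linkedin": {"founding_year": "2011", "hq": "oslo "},
        "registry": {"founding_year": "2009"},
    }
    for field, values in find_signal_conflicts(brand_records).items():
        print(field, values)
```

In this toy data only `founding_year` is flagged, since the two `hq` values differ only in case and whitespace.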

Comparison: Traditional Tracking vs. AI Visibility Probing

Understanding the methodological gap is critical for enterprise resource allocation.

| Feature | Traditional SEO Tracking | AI Visibility Probing (Topify) |
| --- | --- | --- |
| Logic | Keyword Matching & Backlinks | Semantic Intent & Fact Density |
| Tracking Source | Public Search Index (Google) | Simulated Multi-Model Outputs |
| Data Nature | Deterministic (Static Rank) | Probabilistic (Share of Voice %) |
| Primary Metric | Position (1-100) | Confidence Score & Citation Rate |
| Retrieval Check | Googlebot Crawling | RAG Interception & Sniffing |
| Optimization Focus | Click-Through Rate (CTR) | Trust Signals & Recommendation |

For a deeper look at the implementation of these metrics, see our guide on how to rank in AI Overviews.

Real-World Case Study: Reverse-Engineering an "Invisibility Gap"

To illustrate the effectiveness of this methodology, let's examine the case of AeroNet, a B2B connectivity provider.

6.1 The Situation

AeroNet ranked #1 on Google for "low-latency satellite internet for marine logistics." However, when users asked ChatGPT, "Which satellite provider is best for deep-sea oil rigs?", the AI consistently cited a newer competitor with lower domain authority.

6.2 The Topify Diagnosis

Using Topify, the brand ran a 5,000-prompt probing cycle. The software revealed:

The Vector Gap: AeroNet's content was too narrative ("We are leaders in connectivity"). The competitor used a "Fact-First" structure with technical tables showing latency in milliseconds.

The Sentiment Bias: The AI perceived AeroNet as a "legacy land-based provider" because their Knowledge Graph was outdated.

6.3 The Result

By refactoring their content based on these "Black Box" insights, AeroNet increased their AI Share of Voice in maritime prompts from 3% to 41% in three months. They didn't need internal access; they simply needed to observe and respond to the model's behavior. This represents the future of search engine optimization.

Strategic Outlook: Agentic Search and M2M Measurement

By 2026, the primary audience for your website will not be humans, but AI Agents. These agents will autonomously browse, compare, and recommend products to human users.

7.1 Measuring "Agentic Acceptability"

Visibility tracking is evolving to monitor Machine-to-Machine (M2M) Signals. This includes tracking how easily an AI agent can ingest your technical documentation, verify your pricing tables, and confirm your compliance without human intervention.

The New Metric: The Machine Readability Score. This measures the "friction" between an AI agent and your brand's data.

Frequently Asked Questions (FAQ)

8.1 Is brand visibility tracking accurate if you don't have an API from OpenAI?

Yes. Just as a meteorologist can predict the weather by observing atmospheric patterns without "owning" the sky, visibility software uses large-scale statistical sampling and behavioral simulations to achieve a 95%+ accuracy rate in predicting AI recommendations.

8.2 Why does my brand visibility change between ChatGPT and Perplexity?

Each model has a different "Retrieval Weight." Perplexity is highly "RAG-driven," prioritizing real-time facts on the web. ChatGPT relies more on its pre-trained weights and historical reputation. Topify measures these differences separately so you can tailor your content to each platform's logic.

8.3 Can a hallucination about my brand be tracked and fixed?

Absolutely. A hallucination about a brand often occurs when an AI encounters conflicting or outdated data in the Knowledge Graph. By identifying the "source of friction" (such as an old press release or a forgotten LinkedIn profile), Topify helps you synchronize your signals to "force" the AI toward the truth.

8.4 How often should visibility be probed?

LLMs are updated and fine-tuned constantly. A competitor's new blog post or a model's fine-tuning can displace your citation overnight. We recommend a continuous monitoring cycle with Topify to ensure your brand's AI Share of Voice remains stable.

Conclusion: Mastering the Behavioral Web

You don't need to see the code to influence the output. AI visibility tracking is the Science of Observation. By utilizing synthetic probing, RAG interception, and semantic vector modeling, platforms like Topify provide the technical eyes and ears needed to navigate the probabilistic landscape of LLMs.

In the AI-first era, the winners will be the brands that move beyond the "Black Box" mystery and embrace the behavioral data that drives AI citations. By transforming your brand narrative into a verifiable, fact-dense knowledge base, you can ensure that you aren't just a result on a page—but the definitive answer to the user's inquiry.