The Era of Model Fragmentation: Why You Need a Rank Tracking Tool for LLMs

For twenty years, "Search" meant "Google." Tracking was simple because there was only one algorithm to please.

In 2026, the monopoly is broken. A software developer might search for code snippets in Claude 3.5 Sonnet, a consumer might ask ChatGPT (running GPT-4o) for gift ideas, and a researcher might use Perplexity Pro for citations. This phenomenon is called Model Fragmentation.

For marketers, this is a nightmare. Optimizing for one model is hard enough; optimizing for five different "black boxes" simultaneously feels impossible.

If you track only one platform, you are ignoring large segments of your audience. You need a rank tracking tool for LLM ecosystems: a platform that aggregates data from every major AI model into a single source of truth.

This guide explores the challenges of multi-model monitoring and shows how to use tools like Topify to build a unified generative engine optimization (GEO) strategy that works everywhere.

The Challenge: Why One Strategy Doesn't Fit All

Why can't you just use your ChatGPT strategy for Claude? Because different Large Language Models (LLMs) have different "personalities" and retrieval architectures.

The "Conciseness" vs. "Nuance" Spectrum

ChatGPT tends to be direct and conversational. It favors concise answers.

Claude tends to be verbose and nuanced. It favors depth and safety.

Gemini is integrated with Google's ecosystem. It favors fresh, real-time data from Google Search.

A brand might rank #1 on ChatGPT because it has a clear, simple FAQ schema, yet fail on Claude because it lacks in-depth whitepapers. A robust LLM rank tracking dashboard surfaces these discrepancies instantly.

Differing Safety Alignment

We recently found that a cybersecurity brand was visible on ChatGPT but blocked on Llama 3 because of stricter safety filters around "hacking tools." Without a comprehensive generative engine optimization guide and a multi-model tracker, the brand would never have known it was invisible to millions of Meta AI users.

Hallucination Variance

GPT-4 might describe your pricing accurately while Gemini hallucinates a "free tier" you don't offer. Monitoring these discrepancies across all models at once is critical for brand protection.

When evaluating software to manage this chaos, you need a "Single Pane of Glass." Here are the non-negotiable features for 2026.

The Unified "Visibility Score"

You don't have time to check five different dashboards. The best rank tracking tool for LLM platforms aggregates performance into a single "Global Visibility Score," weighted by the market share of each model.
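The article doesn't publish Topify's formula, but a score "weighted by the market share of each model" can be sketched as a weighted average. This is a minimal sketch under assumptions: the function name `global_visibility_score`, the model keys, and the share figures are all hypothetical placeholders, not real market data.

```python
# Hypothetical sketch: a market-share-weighted "Global Visibility Score".
# Model names, scores, and share weights are illustrative, not real data.


def global_visibility_score(scores: dict[str, float], market_share: dict[str, float]) -> float:
    """Weighted average of per-model visibility scores (0-100).

    Weights are renormalized over the models that have both a score and a
    positive market-share entry, so an untracked model does not pull the
    result down.
    """
    covered = [model for model in scores if market_share.get(model, 0) > 0]
    total_weight = sum(market_share[model] for model in covered)
    if total_weight == 0:
        raise ValueError("no tracked model has a positive market-share weight")
    weighted = sum(scores[model] * market_share[model] for model in covered)
    return weighted / total_weight


if __name__ == "__main__":
    scores = {"chatgpt": 80.0, "claude": 40.0, "gemini": 60.0, "perplexity": 50.0}
    share = {"chatgpt": 0.5, "claude": 0.1, "gemini": 0.3, "perplexity": 0.1}  # assumed
    print(f"Global Visibility Score: {global_visibility_score(scores, share):.1f}")
```

Renormalizing over covered models is one design choice; the alternative is to count an untracked model as a score of zero, which deliberately penalizes gaps in coverage.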

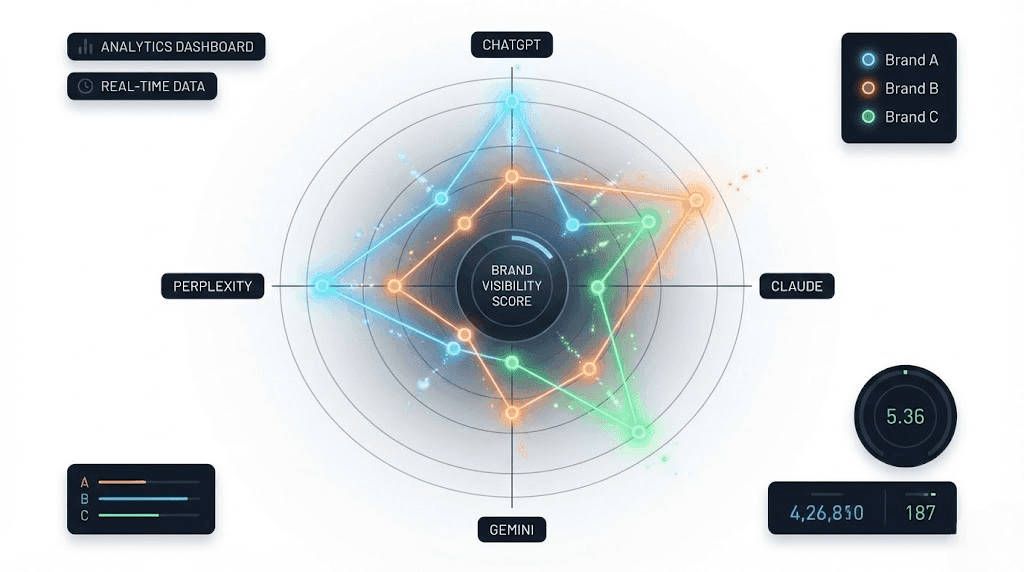

Head-to-Head Model Comparison

Can the tool overlay your visibility on ChatGPT vs. Claude on a single graph? Topify allows you to see: "My sentiment is rising on Perplexity but crashing on Gemini. Why?"
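One simple way to turn "rising on Perplexity, crashing on Gemini" into something a tool can flag is to fit a least-squares slope to each model's score history. This is a sketch, not Topify's method: `trend_labels`, the `flat` threshold of 0.5 points per period, and the weekly scores are hypothetical.

```python
# Hypothetical sketch: label each model's score trend as rising, falling, or flat.


def slope(values: list[float]) -> float:
    """Least-squares slope of equally spaced observations (e.g. weekly scores)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def trend_labels(series: dict[str, list[float]], flat: float = 0.5) -> dict[str, str]:
    """Classify each model's slope; |slope| <= flat counts as flat (assumed threshold)."""
    labels = {}
    for model, values in series.items():
        s = slope(values)
        labels[model] = "rising" if s > flat else "falling" if s < -flat else "flat"
    return labels


if __name__ == "__main__":
    weekly_sentiment = {  # hypothetical weekly sentiment scores
        "perplexity": [60.0, 62.0, 65.0, 68.0],
        "gemini": [70.0, 66.0, 61.0, 55.0],
    }
    print(trend_labels(weekly_sentiment))
```

A slope is less sensitive to a single noisy week than comparing only the first and last data points.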

Cross-Model Hallucination Alerts

If three out of four models are accurate, but one is lying about your product, you need an alert. The tool should highlight the outlier so you can target that specific ecosystem with corrective content.
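The "three out of four" rule can be sketched as a majority vote over a fact extracted from each model's answer. This assumes you have already normalized each answer into a short claim string (for example, "no free tier"); `find_outliers` and the sample claims are hypothetical. A majority can also be wrong, so where you have verified facts about your own product, comparing against those is the safer check.

```python
from collections import Counter


def find_outliers(claims: dict[str, str]) -> list[str]:
    """Return models whose extracted claim differs from the majority claim.

    `claims` maps a model name to a normalized fact pulled from its answer,
    e.g. "no free tier". With no strict majority there is no consensus to
    compare against, so nothing is flagged.
    """
    if not claims:
        return []
    majority, votes = Counter(claims.values()).most_common(1)[0]
    if votes * 2 <= len(claims):
        return []
    return sorted(model for model, claim in claims.items() if claim != majority)


if __name__ == "__main__":
    pricing_claims = {  # hypothetical extracted answers
        "chatgpt": "no free tier",
        "claude": "no free tier",
        "perplexity": "no free tier",
        "gemini": "free tier",
    }
    for model in find_outliers(pricing_claims):
        print(f"Alert: {model} disagrees with the consensus on pricing")
```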

Aggregated Share of Voice (SoV)

Your boss wants to know: "Do we own the AI conversation?" Your tool must answer this by combining your SoV across all models into one metric.
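Combining SoV "across all models into one metric" can be done several ways. A minimal sketch, assuming you have counts of how many tracked answers from each model mention each brand, pools those counts before dividing. The function name and counts are hypothetical.

```python
# Hypothetical sketch: pooled Share of Voice across several models.


def pooled_share_of_voice(mentions: dict[str, dict[str, int]], brand: str) -> float:
    """Brand mentions divided by all tracked brand mentions, summed over models.

    `mentions[model][name]` is how many tracked answers from `model` mention
    brand `name`. Pooling means models with more tracked answers weigh more.
    """
    brand_total = sum(per_model.get(brand, 0) for per_model in mentions.values())
    all_total = sum(sum(per_model.values()) for per_model in mentions.values())
    return brand_total / all_total if all_total else 0.0


if __name__ == "__main__":
    mentions = {  # hypothetical mention counts
        "chatgpt": {"acme": 30, "rival": 70},
        "claude": {"acme": 20, "rival": 80},
    }
    print(f"Acme SoV: {pooled_share_of_voice(mentions, 'acme'):.0%}")
```

Pooling is the simplest option; averaging per-model SoV instead treats every model equally, and weighting each model's SoV by market share ties the metric to audience size.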

To see which platforms offer these features, read our guide to evaluating AI search visibility tools.

Topify: The Ultimate Rank Tracking Tool for LLM Ecosystems

Topify solves the fragmentation problem. It is the only platform built with a "Multi-Agent Architecture," where distinct AI agents monitor ChatGPT, Claude, Perplexity, and Gemini simultaneously.

Why Topify is the Industry Standard:

Universal Dashboard: View your brand's performance across 10+ major models in one view.

Discrepancy Highlighting: Topify automatically flags when one model's output significantly diverges from the consensus (e.g., “Alert: Claude represents your pricing differently than GPT-4”).

Unified Sentiment Engine: It normalizes sentiment scores across different models so you can compare apples to apples.

Strategic Value: Topify enables "Polyglot SEO," the ability to speak the specific language of each model. It tells you exactly what to tweak to win over Claude without losing your standing in ChatGPT.

For specific deep dives into individual platforms, see our guides on tracking ChatGPT, mastering Perplexity, and tracking Claude.

Strategy: How to Monitor Multiple Models Simultaneously

Don't let the data overwhelm you. Follow this workflow to manage multi-model tracking efficiently.

Step 1: Define Your "Model Mix"

Not every brand needs to track every model.

B2B SaaS: Focus on Claude and Perplexity.

B2C E-commerce: Focus on ChatGPT and Gemini.

Dev Tools: Focus on Llama and Claude.

Step 2: Set Up "Consensus Tracking" in Topify

Enter your core prompts into Topify, and the tool will run them across your selected Model Mix. Then check the "Consensus Score": do the models agree on who the market leader is?
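Topify doesn't document how its Consensus Score is computed. A plausible minimal version, assuming you have extracted which brand each model named as market leader for a prompt, is the fraction of models that agree with the most common answer. `consensus_score` and the sample answers are hypothetical.

```python
from collections import Counter


def consensus_score(leaders: dict[str, str]) -> tuple[str, float]:
    """Return the most-named market leader and the fraction of models naming it.

    `leaders` maps each model to the brand it named as market leader for one
    prompt. Ties go to the brand that appears first in `leaders`.
    """
    if not leaders:
        raise ValueError("no model answers to score")
    leader, votes = Counter(leaders.values()).most_common(1)[0]
    return leader, votes / len(leaders)


if __name__ == "__main__":
    answers = {"chatgpt": "Acme", "claude": "Acme", "gemini": "Rival", "perplexity": "Acme"}
    print(consensus_score(answers))
```

A score near 1.0 means the models tell the same story; a low score points you to the prompts where positioning is still up for grabs.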

Step 3: Isolate the Underperformers

If you are winning on ChatGPT but losing on Perplexity, dig into the AI search visibility metrics for that platform. Usually, this means Perplexity is citing a source that ChatGPT ignores, and that source does not feature your brand.
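Finding "a source that ChatGPT is ignoring" comes down to a set difference over cited domains. A minimal sketch, assuming you have collected the URLs each model cited for the same prompts; the function names and URLs are hypothetical.

```python
from urllib.parse import urlparse


def domains(urls: list[str]) -> set[str]:
    """Normalize cited URLs to bare hostnames, dropping a leading 'www.'."""
    hosts = set()
    for url in urls:
        host = urlparse(url).hostname or ""  # hostname is already lowercased
        if host.startswith("www."):
            host = host[4:]
        if host:
            hosts.add(host)
    return hosts


def citation_gap(citations: dict[str, list[str]], winning: str, losing: str) -> set[str]:
    """Domains the losing model cites that the winning model does not."""
    return domains(citations[losing]) - domains(citations[winning])


if __name__ == "__main__":
    citations = {  # hypothetical cited URLs per model
        "chatgpt": ["https://www.example.com/a", "https://docs.acme.io/x"],
        "perplexity": ["https://example.com/b", "https://reviews.example.org/top-10"],
    }
    print(citation_gap(citations, winning="chatgpt", losing="perplexity"))
```

Comparing at the domain level rather than the full URL keeps different pages from the same publication from showing up as separate gaps.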

Step 4: Execute Cross-Pollination

Take the content that is working on your best-performing model and adapt it for the others. If a whitepaper is driving citations in Claude, condense its key points into an FAQ for ChatGPT.

Comparing Multi-Model Tracking Capabilities

Here is how the leading tools handle the challenge of fragmentation.

| Feature | Topify | Profound | Semrush AI | Otterly |
| --- | --- | --- | --- | --- |
| Unified Dashboard | Excellent | Good | Separate tabs | Basic |
| Model Coverage | 10+ Models | 5+ Models | Google Only | 2 Models |
| Discrepancy Alerts | Auto-flagged | Manual check | No | No |
| Sentiment Normalization | Yes | Yes | No | No |
| Price | Mid-Range | High | Mid | Low |

The Future of LLM Rank Tracking: The "Model Router"

As we move toward late 2026, we expect search interfaces to become "Model Agnostic." Users will type a query, and a "Router" will decide which AI model answers it.

Future LLM rank tracking software will need to track this "Routing Layer." Topify is already developing algorithms to predict which model will be called for a given query type (e.g., routing math questions to o1 and creative questions to Claude).
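The article doesn't describe how routing will be predicted. As a toy illustration of the idea, here is a rule-based predictor that mirrors the two examples above (math to o1, creative to Claude). The keyword lists, `predict_route`, and the `gpt-4o` fallback are all assumptions; a production predictor would more likely be a trained classifier.

```python
import re

# Hypothetical routing rules mirroring the article's examples:
# math-style queries -> o1, creative queries -> Claude.
ROUTES = [
    ("o1", re.compile(r"\b(solve|equation|integral|prove|calculate)\b", re.IGNORECASE)),
    ("claude", re.compile(r"\b(write|story|poem|slogan|brainstorm)\b", re.IGNORECASE)),
]
DEFAULT_MODEL = "gpt-4o"  # assumed fallback when no rule matches


def predict_route(query: str) -> str:
    """Return the model a rule-based router would likely send `query` to."""
    for model, pattern in ROUTES:
        if pattern.search(query):
            return model
    return DEFAULT_MODEL


if __name__ == "__main__":
    queries = ["Solve this equation: 2x + 3 = 7", "Write a slogan for a coffee brand", "Best CRM for startups"]
    for q in queries:
        print(f"{q!r} -> {predict_route(q)}")
```

Rules are checked in order, so a query matching both lists goes to the first matching model.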

To stay ahead of these infrastructure changes, subscribe to our definitive blueprint for GEO.

Conclusion

The fragmented search world is only getting more complex. Apple Intelligence, Meta AI, and Amazon Olympus are entering the chat.

If you are trying to check these platforms manually, you have already lost. You need a centralized rank tracking tool for LLMs that brings order to the chaos.

Topify gives you that single pane of glass. It turns the noise of multiple models into a clear signal, helping you optimize your brand's presence across the entire artificial intelligence landscape.

FAQs

Can't I just optimize for ChatGPT and assume it works for others?

No. This is a dangerous assumption. Our data shows a correlation of only 60% between ChatGPT rankings and Claude rankings. Each model has different training data and safety filters, so you need a rank tracking tool that covers multiple LLMs to catch these gaps.
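The article doesn't say how its 60% figure was computed. One standard way to measure agreement between two rankings is Spearman's rank correlation, sketched below under the assumption of untied ranks. The brand names and ranks are hypothetical.

```python
# Hypothetical sketch: Spearman rank correlation between two models' brand rankings.


def _rerank(ranks: dict[str, int], keep: set[str]) -> dict[str, int]:
    """Re-rank the shared brands 1..n in the order the model ranked them."""
    ordered = sorted(keep, key=lambda brand: ranks[brand])
    return {brand: position for position, brand in enumerate(ordered, start=1)}


def spearman(ranks_a: dict[str, int], ranks_b: dict[str, int]) -> float:
    """Spearman's rho over brands both models ranked, assuming no tied ranks.

    Uses rho = 1 - 6 * sum(d^2) / (n * (n^2 - 1)), where d is the rank difference.
    """
    shared = set(ranks_a) & set(ranks_b)
    n = len(shared)
    if n < 2:
        raise ValueError("need at least two brands ranked by both models")
    a, b = _rerank(ranks_a, shared), _rerank(ranks_b, shared)
    d_squared = sum((a[brand] - b[brand]) ** 2 for brand in shared)
    return 1 - 6 * d_squared / (n * (n * n - 1))


if __name__ == "__main__":
    chatgpt = {"Acme": 1, "Beta": 2, "Gamma": 3, "Delta": 4}  # hypothetical ranks
    claude = {"Beta": 1, "Acme": 2, "Gamma": 3, "Delta": 4}
    print(f"Spearman rho: {spearman(chatgpt, claude):.2f}")
```

Re-ranking over the shared brands matters: if one model mentions brands the other never does, the raw positions are no longer a clean 1..n sequence and the formula would be off.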

How does Topify normalize sentiment across models?

Claude is naturally more polite; ChatGPT can be more salesy. Topify uses a calibration layer to adjust the sentiment scores, so a "90/100" on Claude means the same thing as a "90/100" on ChatGPT.
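How Topify's calibration layer works isn't documented here. A common technique with the same goal is percentile normalization: express each raw score as a percentile of that model's own score history, so equal percentiles mean equal relative standing. The function name and score histories below are hypothetical.

```python
from bisect import bisect_left, bisect_right


def percentile_calibrate(raw: float, history: list[float]) -> float:
    """Map a raw sentiment score to its percentile within one model's history.

    Uses the midpoint convention for ties: (below + 0.5 * equal) / n * 100.
    """
    if not history:
        raise ValueError("need a score history for this model")
    ordered = sorted(history)
    below = bisect_left(ordered, raw)
    equal = bisect_right(ordered, raw) - below
    return 100.0 * (below + 0.5 * equal) / len(ordered)


if __name__ == "__main__":
    claude_history = [60.0, 65.0, 70.0, 75.0, 80.0]  # hypothetical past scores
    chatgpt_history = [70.0, 78.0, 85.0, 90.0, 95.0]
    print(percentile_calibrate(80.0, claude_history), percentile_calibrate(95.0, chatgpt_history))
```

With these histories, a raw 80 from Claude and a raw 95 from ChatGPT land on the same calibrated value, which is the "apples to apples" comparison the FAQ describes. The calibration is only as good as the history: each model needs enough past scores for its distribution to be meaningful.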

Does monitoring multiple models cost more?

With most legacy enterprise tools, yes. However, Topify offers bundled model packages, making it cost-effective to track the "Big 4" (GPT, Claude, Gemini, Perplexity) without quadrupling your budget.

What is "Model Hallucination Rate"?

This is a metric tracked by Topify that shows how often a specific model makes up false information about your brand. If Gemini has a high Hallucination Rate for your industry, you know to focus your defensive SEO efforts there.
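The article doesn't define the metric precisely. One reasonable reading is the share of a model's tracked answers that contain at least one false claim about your brand, checked against your own verified facts. This is a minimal sketch under that assumption; `hallucination_rates`, the audit data, and the 0.2 alert threshold are hypothetical.

```python
from collections import defaultdict


def hallucination_rates(checked: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of each model's tracked answers containing at least one false brand claim.

    `checked` holds (model, has_false_claim) pairs, where has_false_claim is the
    result of checking the answer against your own verified brand facts.
    """
    totals = defaultdict(int)
    false_counts = defaultdict(int)
    for model, has_false_claim in checked:
        totals[model] += 1
        false_counts[model] += int(has_false_claim)
    return {model: false_counts[model] / totals[model] for model in totals}


if __name__ == "__main__":
    checked = [  # hypothetical audit results
        ("gemini", True), ("gemini", False), ("gemini", True), ("gemini", False),
        ("claude", False), ("claude", False),
    ]
    rates = hallucination_rates(checked)
    focus = [model for model, rate in rates.items() if rate > 0.2]  # assumed threshold
    print(rates, "focus on:", focus)
```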

How do I start tracking Llama 3 or open-source models?

Most standard tools don't track open-source models. Topify connects to hosted versions of Llama 3 (via Groq or AWS Bedrock) to provide visibility data even for these open-weight models.